Overview

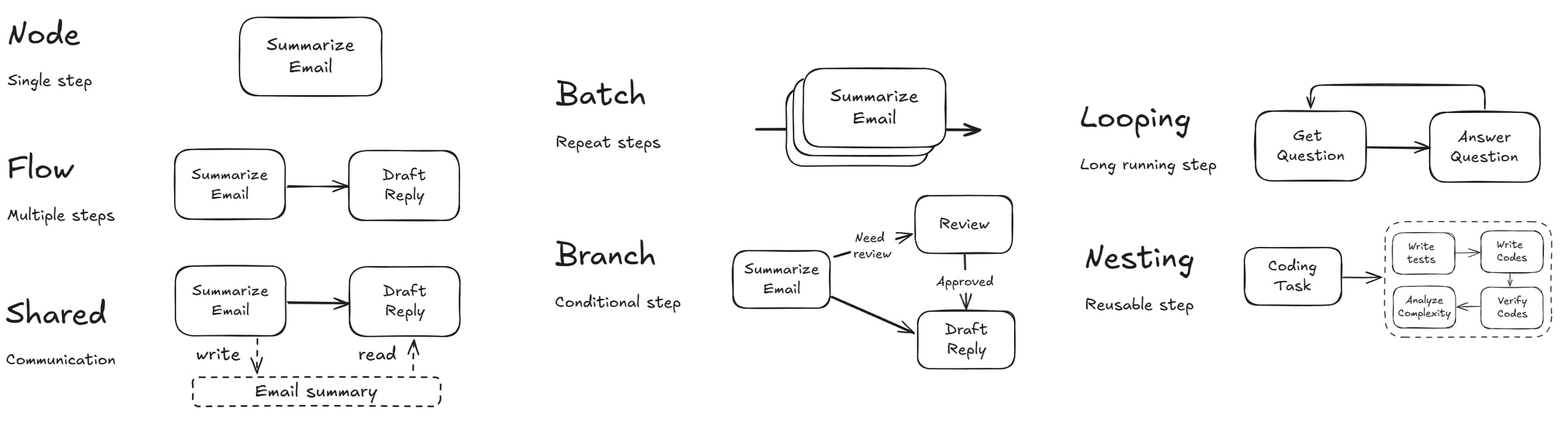

Caskada is built around a simple yet powerful abstraction: a nested directed graph with a shared store. This mental model separates data flow from computation, making complex LLM applications more maintainable and easier to reason about.

Core Philosophy

Caskada follows these fundamental principles:

Modularity & Composability: Build complex systems from simple, reusable components that are easy to build, test, and maintain

Explicitness: Make data dependencies between steps clear and traceable

Separation of Concerns: Data storage (shared store) remains separate from computation logic (nodes)

Minimalism: The framework provides only essential abstractions, avoiding vendor-specific implementations while supporting various high-level AI design paradigms (agents, workflows, map-reduce, etc.)

Resilience: Handle failures gracefully with retries and fallbacks
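A minimal sketch of the resilience principle, assuming nothing about Caskada's internals: a step is retried a fixed number of times, and if every attempt fails, the last error is handed to a fallback instead of crashing the run. The helper `run_with_retries` and its `max_retries`, `wait`, and `fallback` parameters are hypothetical names chosen for illustration, not part of Caskada's API.

```python
# Hypothetical sketch, not Caskada's API: retry a step, then fall back.
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def run_with_retries(
    step: Callable[[], T],
    max_retries: int = 3,
    wait: float = 0.0,
    fallback: Optional[Callable[[Exception], T]] = None,
) -> T:
    """Call `step` up to `max_retries` times; on total failure, use `fallback`."""
    if max_retries < 1:
        raise ValueError("max_retries must be at least 1")
    last_error: Optional[Exception] = None
    for attempt in range(max_retries):
        try:
            return step()
        except Exception as exc:  # broad on purpose: any failure triggers a retry
            last_error = exc
            if wait > 0 and attempt < max_retries - 1:
                time.sleep(wait)  # fixed pause between attempts
    if fallback is not None:
        return fallback(last_error)  # degrade gracefully instead of raising
    raise last_error


calls = {"n": 0}


def flaky() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("temporary failure")
    return "ok"


print(run_with_retries(flaky, max_retries=3))  # ok (succeeds on the third attempt)
print(run_with_retries(lambda: 1 / 0, max_retries=2, fallback=lambda e: "fallback"))  # fallback
```

Keeping the fallback outside the retry loop lets the caller decide whether a persistent failure should return a safe default or propagate.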

The Graph + Shared Store Pattern

The fundamental pattern in Caskada combines two key elements:

Computation Graph: A directed graph where nodes represent discrete units of work and edges represent the flow of control.

Shared Memory Object: A state management store that enables communication between nodes, separating global and local state.
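To make the split between control flow and data concrete, here is a toy, framework-free sketch: plain functions stand in for nodes, a dictionary of `(node, action) -> next node` edges is the graph, and a single dictionary is the shared store. Every name here (`NODES`, `EDGES`, `run_graph`) is hypothetical and only illustrates the pattern; Caskada's own classes look different.

```python
# Hypothetical sketch of "graph + shared store", not Caskada's API.
from typing import Callable, Dict, List, Optional, Tuple

Store = Dict[str, object]
NodeFn = Callable[[Store], str]  # reads/writes the store, returns an action name


def load(store: Store) -> str:
    store["text"] = "  Hello Graph  "
    return "default"


def clean(store: Store) -> str:
    store["text"] = str(store["text"]).strip().lower()
    return "long" if len(str(store["text"])) > 5 else "short"


def summarize(store: Store) -> str:
    store["result"] = f"summary of '{store['text']}'"
    return "default"


def keep(store: Store) -> str:
    store["result"] = store["text"]
    return "default"


NODES: Dict[str, NodeFn] = {"load": load, "clean": clean, "summarize": summarize, "keep": keep}

# Edges carry control flow only: (current node, action) -> next node.
EDGES: Dict[Tuple[str, str], str] = {
    ("load", "default"): "clean",
    ("clean", "long"): "summarize",
    ("clean", "short"): "keep",
}


def run_graph(start: str, store: Store) -> List[str]:
    """Walk the graph from `start`; stop when no edge matches the returned action."""
    path: List[str] = []
    current: Optional[str] = start
    while current is not None:
        path.append(current)
        action = NODES[current](store)  # computation touches only the store
        current = EDGES.get((current, action))
    return path


shared: Store = {}
print(run_graph("load", shared))  # ['load', 'clean', 'summarize']
print(shared["result"])           # summary of 'hello graph'
```

Because the edges never hold data and the nodes never decide who runs next beyond naming an action, either side can change without touching the other.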

This pattern offers several advantages:

Clear visualization of application logic

Easy identification of bottlenecks

Simple debugging of individual components

Natural parallelization opportunities

Key Components

Caskada's architecture is based on these fundamental building blocks:

| Component | Description | Key Features |
|---|---|---|
| Node | The basic unit of work | Clear lifecycle (prep → exec → post), fault tolerance (retries), graceful fallbacks |
| Flow | Connects nodes together | Action-based transitions, branching, looping (with cycle detection), nesting, sequential/parallel execution |
| Memory | Manages state accessible during flow execution | Shared global store, forkable local store, cloning for isolation |

How They Work Together

Nodes perform individual tasks with a clear lifecycle:

prep: Read from the shared store and prepare data.

exec: Execute computation (often LLM calls); it cannot access memory directly.

post: Process results, write to the shared store, and trigger next actions.
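The three-phase lifecycle can be sketched as a small base class. This is a hypothetical, simplified stand-in rather than Caskada's Node: `prep` receives a plain dictionary in place of the framework's memory object, `exec` only sees what `prep` returned (so it cannot touch the store), and `post` writes the result back and returns an action name. The `max_retries` attribute and `exec_fallback` hook are assumptions added to mirror the retries and graceful fallbacks mentioned for nodes.

```python
# Hypothetical sketch of a prep -> exec -> post node, not Caskada's Node class.
from typing import Any, Dict

Store = Dict[str, Any]


class SketchNode:
    max_retries = 1  # assumption: how many times exec may be attempted

    def prep(self, store: Store) -> Any:
        return None

    def exec(self, prep_res: Any) -> Any:
        return None

    def exec_fallback(self, prep_res: Any, error: Exception) -> Any:
        raise error  # default: no graceful fallback, re-raise the last error

    def post(self, store: Store, prep_res: Any, exec_res: Any) -> str:
        return "default"

    def run(self, store: Store) -> str:
        prep_res = self.prep(store)
        for attempt in range(self.max_retries):
            try:
                exec_res = self.exec(prep_res)  # exec never receives the store
                break
            except Exception as exc:
                if attempt == self.max_retries - 1:
                    exec_res = self.exec_fallback(prep_res, exc)
        return self.post(store, prep_res, exec_res)


class WordCount(SketchNode):
    max_retries = 2

    def prep(self, store: Store) -> str:
        return store["text"]  # read from the shared store

    def exec(self, text: str) -> int:
        return len(text.split())  # pure computation (an LLM call would go here)

    def post(self, store: Store, prep_res: str, exec_res: int) -> str:
        store["word_count"] = exec_res  # write the result back
        return "long" if exec_res > 3 else "short"  # action for the flow to follow


store: Store = {"text": "nodes keep computation apart from storage"}
print(WordCount().run(store), store["word_count"])  # long 6
```

Keeping `exec` free of store access is what makes it safe to retry: re-running it cannot leave half-written state behind.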

Flows orchestrate nodes by:

Starting with a designated start node.

Following action-based transitions (driven by trigger calls in post) between nodes.

Supporting branching, looping, and nested flows.

Executing triggered branches sequentially (Flow) or concurrently (ParallelFlow).

Supporting nested batch operations.
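A flow's job can be sketched in a few dozen lines: start at a designated node, follow the actions each node triggers, and run fan-out branches either one after another or concurrently. The `Step` and `SketchFlow` classes and the `concurrent` switch are hypothetical simplifications, not Caskada's Flow or ParallelFlow; here a step "triggers" branches by returning a list of action names, Python's `asyncio.gather` supplies the concurrency, and loops and nested flows are left out for brevity.

```python
# Hypothetical sketch of sequential vs concurrent branch execution, not Caskada's API.
import asyncio
from typing import Any, Awaitable, Callable, Dict, List

Store = Dict[str, Any]
StepFn = Callable[[Store], Awaitable[List[str]]]


class Step:
    """A node whose async function returns the actions it triggers."""

    def __init__(self, name: str, fn: StepFn):
        self.name = name
        self.fn = fn
        self.successors: Dict[str, "Step"] = {}

    def on(self, action: str, nxt: "Step") -> "Step":
        self.successors[action] = nxt  # action-based transition
        return nxt


class SketchFlow:
    """Follows triggered actions from a designated start step."""

    def __init__(self, start: Step, concurrent: bool = False):
        self.start = start
        self.concurrent = concurrent  # False: one branch after another; True: all at once

    async def _walk(self, step: Step, store: Store) -> None:
        actions = await step.fn(store)
        # Actions without a matching successor simply end that path.
        branches = [step.successors[a] for a in actions if a in step.successors]
        if self.concurrent:
            await asyncio.gather(*(self._walk(b, store) for b in branches))
        else:
            for b in branches:
                await self._walk(b, store)

    async def run(self, store: Store) -> Store:
        await self._walk(self.start, store)
        return store


def make_step(name: str, delay: float, actions: List[str]) -> Step:
    async def fn(store: Store) -> List[str]:
        await asyncio.sleep(delay)  # stands in for an LLM or I/O call
        store.setdefault("log", []).append(name)
        return actions
    return Step(name, fn)


start = make_step("fan_out", 0.0, ["a", "b"])
start.on("a", make_step("slow", 0.05, []))
start.on("b", make_step("fast", 0.01, []))

seq = asyncio.run(SketchFlow(start).run({}))
par = asyncio.run(SketchFlow(start, concurrent=True).run({}))
print(seq["log"])  # ['fan_out', 'slow', 'fast']
print(par["log"])  # ['fan_out', 'fast', 'slow']
```

The same graph runs under both modes; only the flow's execution policy changes, which is why the fast branch finishes first only in the concurrent run.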

Communication happens through the memory instance provided to each node's lifecycle methods (the prep and post methods):

Global Store: A shared object accessible throughout the flow. Nodes typically write results here.

Local Store: An isolated object specific to a node and its downstream path, typically populated via forkingData in trigger calls.
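The two-level store can be sketched as an object that looks up keys in its local layer first and falls back to the global layer. In this hypothetical `SketchMemory`, writes go to the global store, `fork` copies the local layer and overlays extra data (playing the role of forkingData), and the global dictionary is shared by reference across every fork. The class and method names, and the local-first lookup rule, are assumptions for illustration rather than Caskada's documented behavior.

```python
# Hypothetical sketch of a global/local memory split, not Caskada's Memory class.
from typing import Any, Dict, Optional


class SketchMemory:
    def __init__(self, global_store: Dict[str, Any], local_store: Optional[Dict[str, Any]] = None):
        self.global_store = global_store            # shared by reference across forks
        self.local_store = dict(local_store or {})  # private copy for this path

    def get(self, key: str, default: Any = None) -> Any:
        # Assumption: local values shadow global ones.
        if key in self.local_store:
            return self.local_store[key]
        return self.global_store.get(key, default)

    def set(self, key: str, value: Any) -> None:
        self.global_store[key] = value  # results go to the shared global store

    def fork(self, forking_data: Optional[Dict[str, Any]] = None) -> "SketchMemory":
        # New local layer = copy of this one + data attached to the trigger.
        local = {**self.local_store, **(forking_data or {})}
        return SketchMemory(self.global_store, local)


root = SketchMemory({"topic": "graphs"})
branch_a = root.fork({"item": "A"})
branch_b = root.fork({"item": "B"})

branch_a.set("result_A", f"{branch_a.get('topic')}:{branch_a.get('item')}")
branch_b.set("result_B", f"{branch_b.get('topic')}:{branch_b.get('item')}")

print(root.get("item"))           # None (local data stays on its branch)
print(sorted(root.global_store))  # ['result_A', 'result_B', 'topic']
```

Each branch sees its own `item` without interfering with its sibling, while both results land in the one global store that downstream nodes read.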

Getting Started

If you're new to Caskada, we recommend exploring these core abstractions in the following order:

Node - Understand the basic building block

Flow - Learn how to connect nodes together

Memory - See how nodes share data

Once you understand these core abstractions, you'll be ready to implement various Design Patterns to solve real-world problems.
